As part of this year’s Design Automation Conference, I participated on the panel “Architecture, IP, or CAD: What’s Your Pick for SoC Security?”. That’s a bunch of acronyms and buzzwords related to the question of how to build more secure computer chips. DAC is one of the oldest, largest, and most prestigious conferences in electronics design. It was also the first big research conference that I attended; I went to DAC in New Orleans in 1999 as an undergraduate (which was an eye-opening experience in many regards), so I guess this was my 20th DAC anniversary.

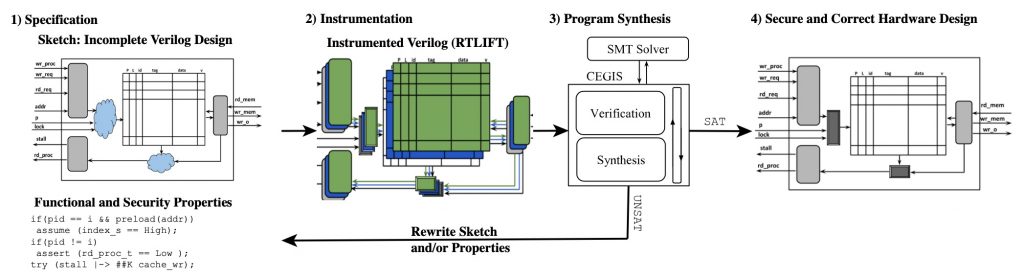

I’ve been doing research in the hardware security space for a while now (more than 15 years!). I’ve seen this community grow from a niche academic community into a major focus at DAC (there were security sessions almost non-stop this year). It was also nice to see more hardware security companies on the exhibit floor, including the amazing Tortuga Logic (full disclosure: I am a co-founder). Security has clearly become a major research and market push for the semiconductor and EDA industries.

I was the “academic” on this panel, alongside two folks from industry: Eric Peeters from Texas Instruments and Yervant Zorian from Synopsys. Serge Leef from DARPA was the other panelist. Serge recently moved to DARPA from Mentor Graphics and is looking to spend a lot of our taxpayers’ money on hardware security. A very wise investment, in my totally impartial opinion. I’m guessing that most of the audience was there to hear what Serge had to say and to see if any money fell out of his pockets as he left the room.

The panel started with short (5-minute) presentations from each panelist, followed by plenty of time for questions from the moderator (the great Swarup Bhunia) and the audience.

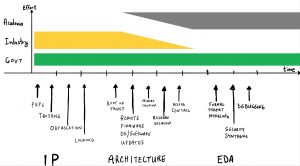

My talking points focused on how academia, industry, and government should interact in this space. My answer: industry and government should give lots of funding for academic research (again, I’m totally not biased here…). I also argued that there really isn’t all that much interesting research left in hardware IP security, which I defined as Trojans, PUFs, obfuscation, and locking. Finally, I suggested some areas that I find more promising for research, including formalizing threat models and figuring out how to debug hardware security vulnerabilities. Neither is a small task, and my research group is making strides in both.

During the open discussion, there were many other interesting points related to industry’s main interests (root of trust, not Trojans, …), the number of hardware vulnerabilities in the wild, metrics, the hardware security lifecycle, and so on.

It was a quick visit to Vegas (~1 day), but you brought back some good memories, gave me some great food, and didn’t take too much of my money. All in all, a successful trip.

-Ryan