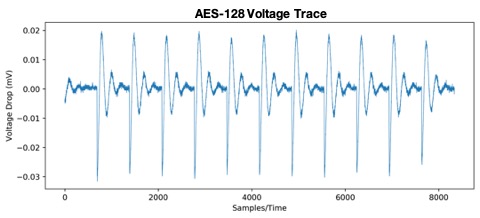

It is surprisingly easy to extract critical information from a computer chip simply by monitoring the amount of power it consumes over time. These power side channels have been used time and again to break otherwise secure cryptographic algorithms. Many mitigation strategies have been proposed to thwart these attacks, but their effectiveness is difficult to measure because existing vulnerability metrics do not assess leakage comprehensively. In particular, metrics typically focus on single instants in time, i.e., specific attack points. This severely underestimates information leakage, especially for emerging attacks that target multiple points in the power consumption trace.
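A toy simulation can illustrate why a single-point view can miss leakage. The sketch below is not the metric from the paper. It assumes a hypothetical two-sample trace in which a secret bit is hidden by a random mask, so neither sample on its own depends on the secret, but the two samples together do. All names (`simulate_traces`, `welch_t`, `split_by_secret`) and parameters (noise level, trace count) are made up for illustration. It compares a per-sample Welch's t-test against the same test applied to the centered product of the two samples.

```python
import math
import random
import statistics


def welch_t(a, b):
    """Welch's t-statistic between two lists of samples."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    diff = statistics.fmean(a) - statistics.fmean(b)
    return diff / math.sqrt(var_a / len(a) + var_b / len(b))


def simulate_traces(n, noise=0.5, seed=0):
    """Hypothetical 2-sample power traces for a masked secret bit.

    Sample 0 leaks the random mask m, sample 1 leaks m XOR secret.
    This leakage model is an assumption made for illustration only.
    """
    rng = random.Random(seed)
    traces, secrets = [], []
    for _ in range(n):
        secret = rng.getrandbits(1)
        mask = rng.getrandbits(1)
        traces.append((mask + rng.gauss(0, noise),
                       (mask ^ secret) + rng.gauss(0, noise)))
        secrets.append(secret)
    return traces, secrets


def split_by_secret(values, secrets):
    """Partition values into two groups: secret == 0 and secret == 1."""
    g0 = [v for v, s in zip(values, secrets) if s == 0]
    g1 = [v for v, s in zip(values, secrets) if s == 1]
    return g0, g1


traces, secrets = simulate_traces(4000)

# Single-point view: test each time sample independently.
t_single = [
    welch_t(*split_by_secret([tr[i] for tr in traces], secrets))
    for i in range(2)
]

# Multi-point view: combine both samples via their centered product.
means = [statistics.fmean([tr[i] for tr in traces]) for i in range(2)]
product = [(tr[0] - means[0]) * (tr[1] - means[1]) for tr in traces]
t_joint = welch_t(*split_by_secret(product, secrets))

print("per-sample |t|:", [round(abs(t), 2) for t in t_single])
print("joint |t|:", round(abs(t_joint), 2))
```

Under these assumptions, the per-sample statistics should stay below the commonly used |t| > 4.5 leakage threshold, while the joint statistic should exceed it by a wide margin. An assessment that looks at one time sample at a time would therefore call this toy design safe even though the secret is recoverable from two samples together.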

We developed a multidimensional metric that addresses these flaws and enables hardware designers to understand, quickly and more effectively, how resistant the hardware they develop is to power side-channel attacks. Our metric considers all points in time of the power trace, without assuming an underlying model of computation or leakage. This will enable the development of more secure hardware that is resilient to power side-channel attacks. This work was recently published at the International Conference on Computer Aided Design (ICCAD), one of the premier forums for technical innovations in electronic design automation.

For further information, see: Alric Althoff, Jeremy Blackstone, and Ryan Kastner, “Holistic Power Side-Channel Leakage Assessment: Towards a Robust Multidimensional Metric,” International Conference on Computer Aided Design (ICCAD), November 2019 (pdf)